Our Solution

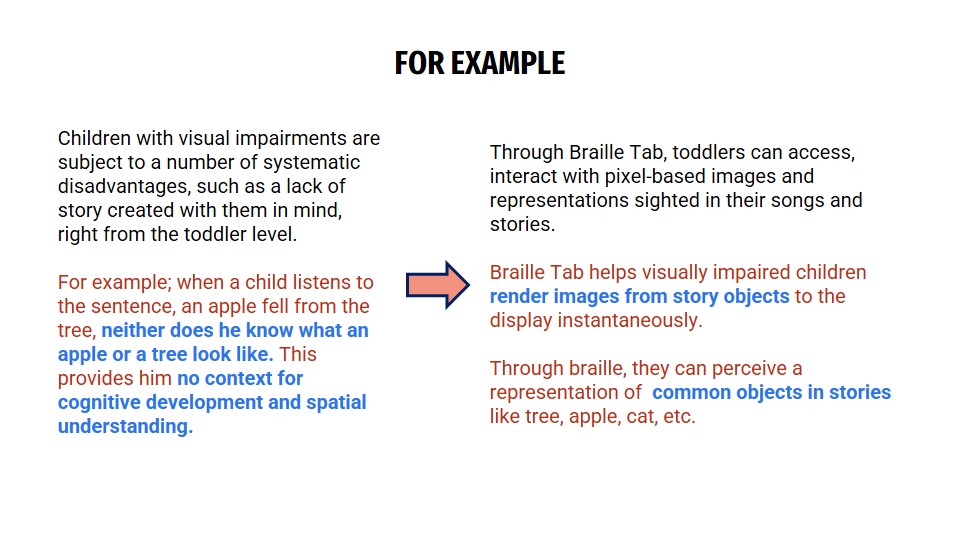

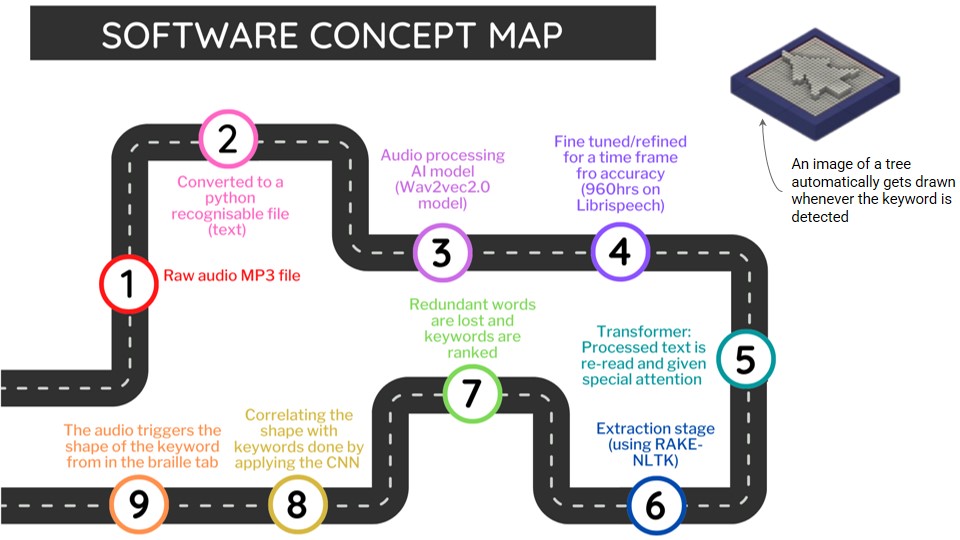

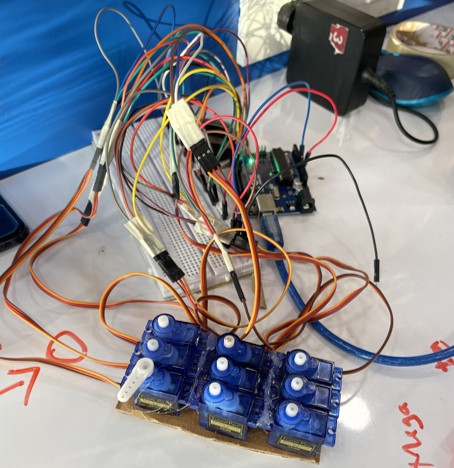

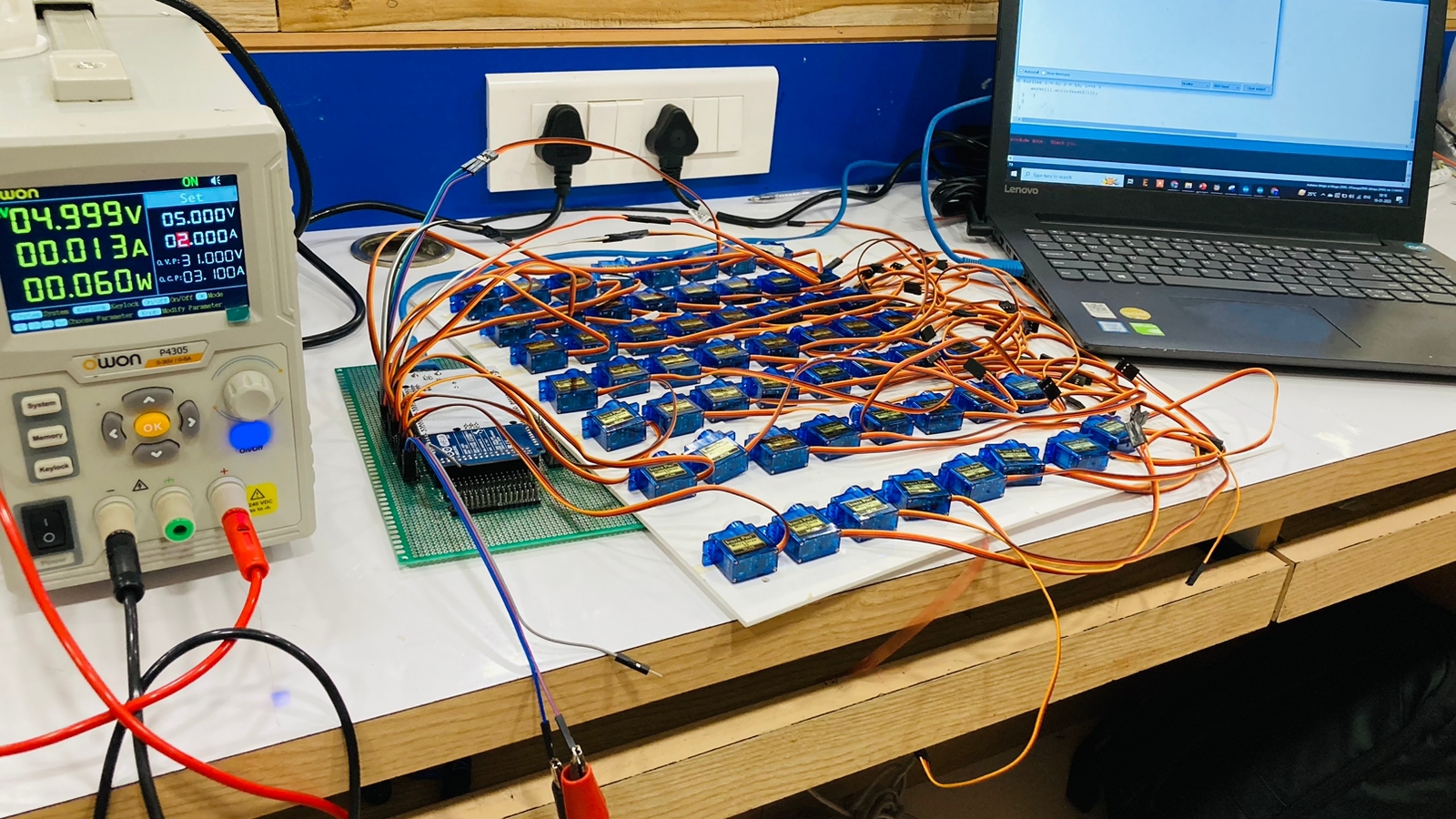

Encouraged by developments in haptic technology, we have undertaken ‘Project Braille Tab’, a prototype that displays tactile images of keywords extracted from audio. It puts stories and images at your fingertips in real time, bringing the visually impaired community one step closer to digital equality: any keyword extracted from audio can be made tactile for all users. We took great inspiration from children’s rebus stories, which represent crucial words in a story as pictures. Our model does exactly this, but uses raised dots to display a shape or picture. With the Braille Tab, we aim to build a tactile display that pushes boundaries and expands the possibilities of digital accessibility.
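The keyword-to-picture idea can be illustrated with a small Python sketch. This is only an illustration and is not the Braille Tab implementation. It assumes the audio has already been transcribed to text, since speech-to-text is out of scope here. It also uses a plain word lookup in place of a real keyword extractor and draws shapes from a hypothetical `TACTILE_LIBRARY` of small binary bitmaps, in which `1` marks a raised dot. All names in the code are hypothetical.

```python
from typing import Dict, List

# Hypothetical tactile library: each keyword maps to a small binary bitmap,
# where 1 means "dot raised" and 0 means "dot lowered".
TACTILE_LIBRARY: Dict[str, List[List[int]]] = {
    "sun": [
        [0, 1, 1, 1, 0],
        [1, 1, 1, 1, 1],
        [1, 1, 1, 1, 1],
        [0, 1, 1, 1, 0],
    ],
    "tree": [
        [0, 1, 1, 1, 0],
        [1, 1, 1, 1, 1],
        [0, 0, 1, 0, 0],
        [0, 0, 1, 0, 0],
    ],
}


def extract_keywords(transcript: str, vocabulary: Dict[str, List[List[int]]]) -> List[str]:
    """Return vocabulary keywords in the order they appear in the transcript.

    A naive stand-in for real keyword extraction: lowercase each word,
    strip surrounding punctuation, and keep it if it is in the vocabulary.
    """
    words = (w.strip(".,!?;:\"'").lower() for w in transcript.split())
    return [w for w in words if w in vocabulary]


def to_pin_rows(bitmap: List[List[int]]) -> List[str]:
    """Render a bitmap as text rows: 'o' = raised dot, '.' = lowered dot."""
    return ["".join("o" if cell else "." for cell in row) for row in bitmap]


def frames_for(transcript: str) -> List[List[str]]:
    """Produce one tactile frame per recognised keyword, like a rebus story."""
    keywords = extract_keywords(transcript, TACTILE_LIBRARY)
    return [to_pin_rows(TACTILE_LIBRARY[k]) for k in keywords]


if __name__ == "__main__":
    story = "The sun rose over the old tree."
    for frame in frames_for(story):
        print("\n".join(frame))
        print()
```

Running the script prints one dot pattern for "sun" and then one for "tree". A physical device would send each frame to its pin actuators rather than printing it, and that interface is outside the scope of this sketch.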